Watch this video which I recorded two years ago while travelling in Madagascar:

If it looks crazy, that’s because it is.

As a tourist, you will almost always rent a car with a driver. You don’t know the rules. It may seem that there aren’t any rules. But after having been in and watched the traffic for dozens of hours, I realized that there are rules, not in the sense of road traffic regulations but rather unwritten rules that most drivers will follow:

- Drive when it’s clear.

There are almost no traffic lights or street signs. No right of way rules (at least none that anyone follows). If you can, you drive. If you’re first, you get to go and others will yield. That’s sometimes a matter of millimeters but it usually works out. Everybody does it and nobody gets mad (usually).

- Don’t kill (or hurt) any people.

This seems self-explanatory but it goes both ways: Drivers will honk to let you know they’re coming. But if you don’t make way for them, you may get killed. At the same time, it is accepted that lots of people walk along the side of the road or walk in between cars when traffic moves slowly.

- Don’t kill any animals.

That chicken running around belongs to somebody. If you run over it, a family might go hungry. If there’s a herd of cows in the street, drivers will wait patiently until they can keep going. Killing a cow can ruin someone’s life.

- Don’t crash into other vehicles. (Also don’t damage your own vehicle.)

It’s clear that you don’t want to be in an accident. And as I mentioned above, your car may sometimes get extremely close to another car. But everyone will always try to avoid accidents. I couldn’t determine any hierarchy (e.g. trucks before cars, which can be seen in other countries). Everyone will push as much as they can but stop short of crashing into anyone.

These “rules” seem straightforward but they require experience, foresight, and a great sense of everything that’s going on around you. Take rule #2: People will often not realize you’re approaching them. So you honk to let them know. (Honking is not an act of aggression but rather a signal to other road users.) If a child walks along the road, you have to estimate their approximate age. Kids 10 years and older will make way for you. But small children (e.g. five years and younger) may not, so you’ll have to slow down and be ready to come to a full stop at any moment. If that small child holds their mother’s hand, however, you can rely on the mother to pull her child aside.

Adults will usually yield. But there was one case where our driver noticed a drunk man coming out of a bar. So he slowed down because he knew that the man might stagger into the street at any time. All of these situations made me think: How could a self-driving system make any of these assessments?

There is a lot more to driving than knowing where things are. It’s psychology. You have to be able to predict how other road users will behave and react appropriately. This requires reasoning about all events happening around you, and that’s something that modern computer systems don’t seem to have mastered yet. Deep learning models such as GPT-3 seem very advanced, but they don’t reason about things; they just synthesize from the broad knowledge they store. And while the result is often eerily correct, it’s also wrong many times.

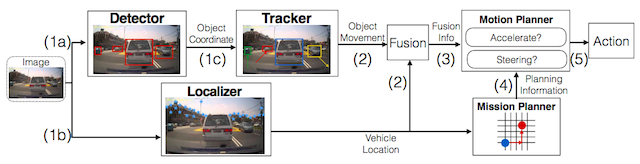

Have a look at the architecture of an autonomous driving system (taken from this paper):

(Update Sep 2021: More information about Tesla’s architecture can be found here.)

In this model, machine learning is at best applied to steps 1 and 2. A lot of work is being done on improving the detection and tracking of objects and on building a 3D model from that information. But deriving a strategic plan for when to turn, when to change lanes, when to slow down, when to accelerate, and so on, is still up to the algorithms developed by the teams at Waymo, Tesla, Uber, et al. And those don’t perform very well even in a much less chaotic environment than Madagascar:

If the system cannot even reliably determine that a car is parked and won’t move away, how can it determine that the child is less than 5 years old and may behave erratically? Note that I’m only picking example situations here, so don’t get hung up on that child example. Life is too multifaceted to add all possible situations to the code and have the program make the right decision.
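To make that concrete, here is a minimal Python sketch of what hard-coding the pedestrian rule I described earlier might look like. Everything in it is hypothetical: the `Pedestrian` fields, the `plan_for` function, and the cautious handling of ages between the two thresholds are my own illustration, not how any real driving system works. It also assumes that something upstream has already estimated the age and noticed the drunkenness, which is the hard part.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    HONK_AND_PROCEED = "honk and proceed"
    SLOW_AND_PREPARE_TO_STOP = "slow down and be ready to stop"


@dataclass
class Pedestrian:
    # Hypothetical inputs; a real system would have to estimate all of these.
    estimated_age: int
    holding_adult_hand: bool = False
    appears_drunk: bool = False


def plan_for(p: Pedestrian) -> Action:
    """Hand-written rules covering only the situations described in this post."""
    if p.appears_drunk:
        # A drunk person may stagger into the street at any time.
        return Action.SLOW_AND_PREPARE_TO_STOP
    if p.estimated_age < 10 and not p.holding_adult_hand:
        # Small children may not make way. Ages 6 to 9 are not covered by my
        # observations, so treating them cautiously is an assumption.
        return Action.SLOW_AND_PREPARE_TO_STOP
    # Older kids, adults, and children led by an adult usually yield after a honk.
    return Action.HONK_AND_PROCEED


if __name__ == "__main__":
    print(plan_for(Pedestrian(estimated_age=4)).value)
    print(plan_for(Pedestrian(estimated_age=4, holding_adult_hand=True)).value)
    print(plan_for(Pedestrian(estimated_age=30, appears_drunk=True)).value)
```

Three rules cover three situations. Anything I did not happen to observe, say an adult who simply isn’t paying attention, falls through to the default and gets honked at, whether or not that is the right call.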

Until we find out how to make computers reason about and correctly predict the behaviour of people and that behaviour’s consequences, I don’t see Level 5 (“Full Automation”) driving happening.